Table of Contents

Open Table of Contents

Abstract

Task Arithmetic has emerged as a simple yet effective method to merge models without additional training. However, by treating entire networks as flat parameter vectors, it overlooks key structural information and is susceptible to task interference. In this paper, we study task vectors at the layer level, focusing on task layer matrices and their singular value decomposition. In particular, we concentrate on the resulting singular vectors, which we refer to as Task Singular Vectors (TSV). Recognizing that layer task matrices are often low-rank, we propose TSV-Compress (TSV-C), a simple procedure that compresses them to ~10% of their original size while retaining ~99% of accuracy. We further leverage this low-rank space to define a new measure of task interference based on the interaction of singular vectors from different tasks. Building on these findings, we introduce TSV-Merge (TSV-M), a novel model merging approach that combines compression with interference reduction, significantly outperforming existing methods.

Highlights

-

Introduces a spectral perspective on task finetuning of pretrained models, revealing the dominant directions of interference that govern performance changes during model merging.

-

Presents a lightweight procedure for decomposing task vectors into TSVs using singular value decomposition, preserving layer-wise structure and reducing interference, which mitigates negative transfer or task forgetting.

-

Demonstrates consistent performance improvements across all dataset tasks using CLIP ViT Transformers.

-

Provides practical guidance outlining when TSV-based updates outperform classical model merging baselines, without requiring extra training (a.k.a. test-time adaptation) and without introducing unnecessary hyperparameter knobs.

Method Overview

-

Layer-wise SVD & TSVs: For each layer’s task matrix (fine-tuned minus base weights) compute an SVD . Treat the left/right singular vectors and as Task Singular Vectors (TSVs), a structured basis for each task at that layer.

-

Low-rank truncation (TSV-C): Keep only the top-k singular components per task and layer to compress task updates ~10x while preserving ~99% of the original accuracy.

-

Interference scoring (STI): Quantify Singular Task Interference by measuring cross-task overlap of TSVs, via a dot product of and deviations from identity; higher overlap ⇒ more interference.

-

Interference reduction: Decorrelate TSVs across tasks by whitening or orthogonal Procrustes, which are shown to be equivalent transformations, on the concatenated TSVs; this minimizes cross-task interactions before merging.

-

Whitened low-rank merge (TSV-M): Form a block-diagonal matrix of retained singular values, recombine with the whitened TSVs of U and V to obtain a low-rank per-layer update, and then add it (with a global scale ) to the base model to produce the merged multi-task network. To ensure ease of adoption, we recommend using a default value based on extensive empirical testing, which has shown robustness across a variety of tasks.

Experimental Insights

-

TSV-guided merging improves accuracy on standard model-merging benchmarks by over 10%.

-

TSV-C achieves ~99% of the full fine-tuning accuracy while using only a small fraction (~10%-15%) of the parameters, significantly reducing storage requirements (RAM utilization on deployment).

-

Interference visualizations reveal a progression from general to task-specific concepts with depth, aligning with the general knowledge of semantic hierarchy learned by deep networks.

-

Ablations highlight that both compression and orthogonalization are key to reduce interference and improve performance. Results highlight the central role played by the amount of information a model can “store” through the maximum rank of each layer’s task matrix. To delve deeper into this, one hypothesis is that compression alone might be insufficient because it reduces dimensionality without addressing the inherent overlap of task representations. Orthogonalization, on the other hand, systematically uncouples these representations, minimizing cross-task interference. This interplay suggests that while compression reduces storage and preserves essential features, orthogonalization ensures these features maintain their unique identities without interference.

Thoughts

TSV offer an interpretable and efficient mechanism to adapt and merge models, with natural extensions to continual and federated learning. One open question is the precise link between a layer-matrix’s maximum rank and the amount of information it can store. Intuitively, TSV can be seen as a mathematical procedure that, given a set of matrices each with a different rank, retains for each rank dimension the most informative directions up to the available rank, then orthogonalizes them jointly to minimize interference. In this way, the maximum rank of the single-layer matrix, in which we want to store all the information coming from the entire set, and no more than that can be done to save information, and then recombine it without adding interfering directions. This “use-the-rank-you-have” principle suggests that simple tasks, like MNIST, may occupy fewer rank directions than a more complex one, if you do not want to have equal treatment for each task.

A related matrix-rank perspective: for two task matrices A and B of the same layer, one can ask when the rank(A + B) is equal to rank(A) + rank(B). Equality holds under a strong but illustrative condition: both the column spaces and the row spaces are mutually orthogonal, equivalently, so that and , in other words when the intersection between the column space and also the row space of A and B are just the zero vector. This is precisely the scenario TSV-M aims to approximate by whitening/orthogonalizing TSVs before recombination, thereby pushing A and B toward additive, non-interfering contributions within each layer’s rank budget.

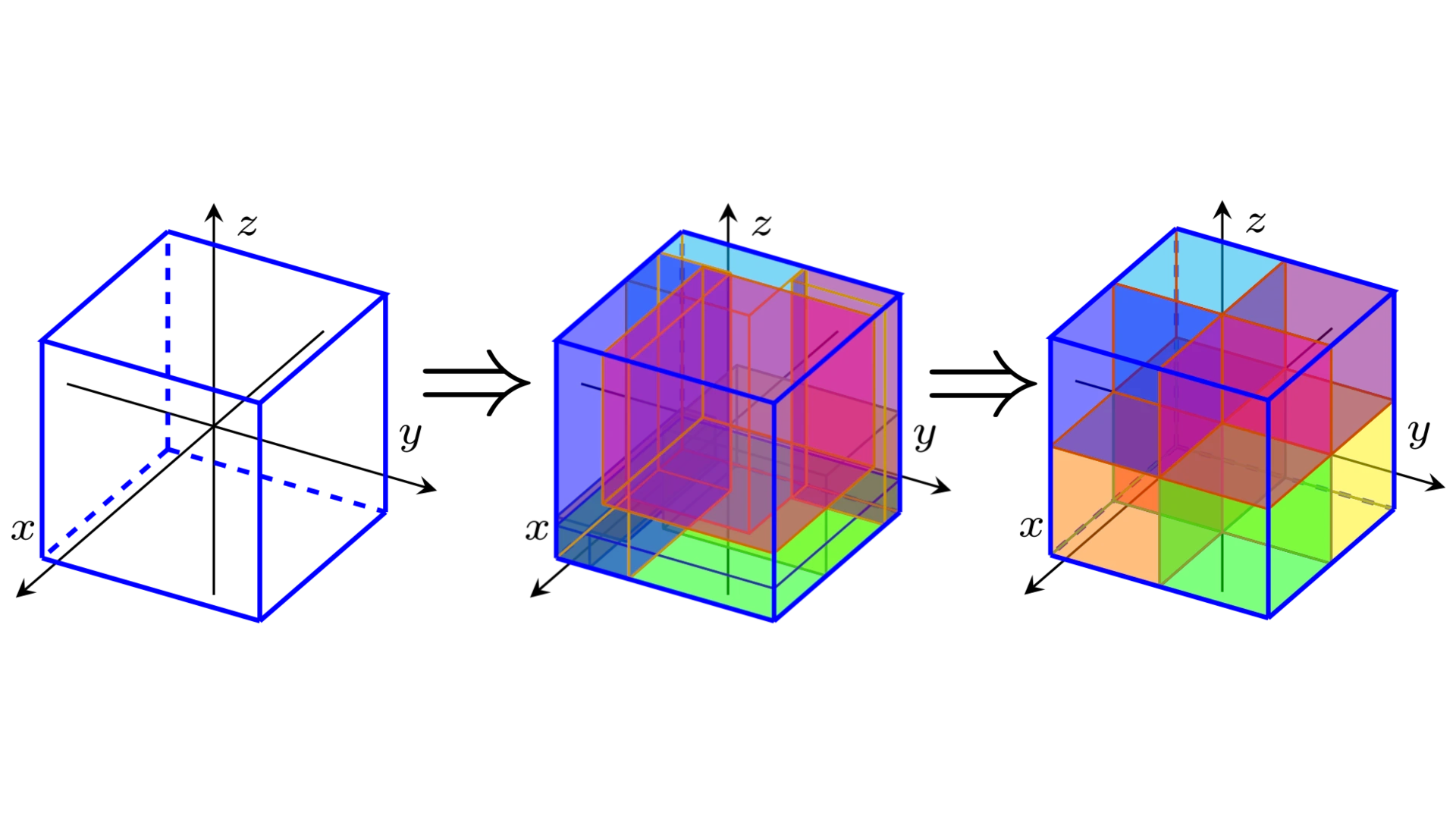

A useful visualization analogy that has helped me to understand this concept is the figure above, where the space of a neural network layer is represented as an empty cube. Each task occupies a subspace within this cube, represented by smaller colored cubes. When two tasks are naively merged, they interfere; their subspaces overlap, leading to conflicts in the shared parameters. By orthogonalizing the TSVs subspaces, we effectively rearrange these smaller cubes so that they fit into the larger cube without overlapping, allowing each task to utilize its own distinct subspace. This visualization helps to conceptualize how TSV-M reduces interference and maximizes the effective use of the available parameter space; but also open an interesting question about the effective usage of knowledge by these networks, in my opinion, in this weay, we are not merging the networks the way we have intended from beginning: fuse the knowledge to get a better understanding of the entire world. Here we are just fusing more networks togheter, each one specialized in a small task, without really to get a better and general understanding of all the concepts together. This is a huge limitation point in my opinion and I do not have a clear idea on how and when we will be able to effectively resolve this issue, or if this research direction is telling us that a neural network can only store information in separate subspace (circuits, modules, or pathways) without really being able to find useful intersections between different tasks.